Azure Developer Community Blog > Getting Started – Generative AI with Phi-3-mini: A Guide to Inference and Deployment

You may still be digesting last week's Meta Llama 3 release, but today Microsoft did something different and released the new Phi-3 series of models. The first wave of releases on Hugging Face is Phi-3-mini, with 3.8B parameters. Phi-3-mini runs not only on traditional computing devices but also on edge devices such as mobile and IoT devices. The release covers the traditional PyTorch model format, a quantized version in GGUF format, and an ONNX-based quantized version, which gives developers options for different application scenarios. This blog explores the model formats released for Phi-3-mini, combined with different technical frameworks, so that everyone can start by running inference with Phi-3-mini.

Use Semantic Kernel to access Phi-3-mini

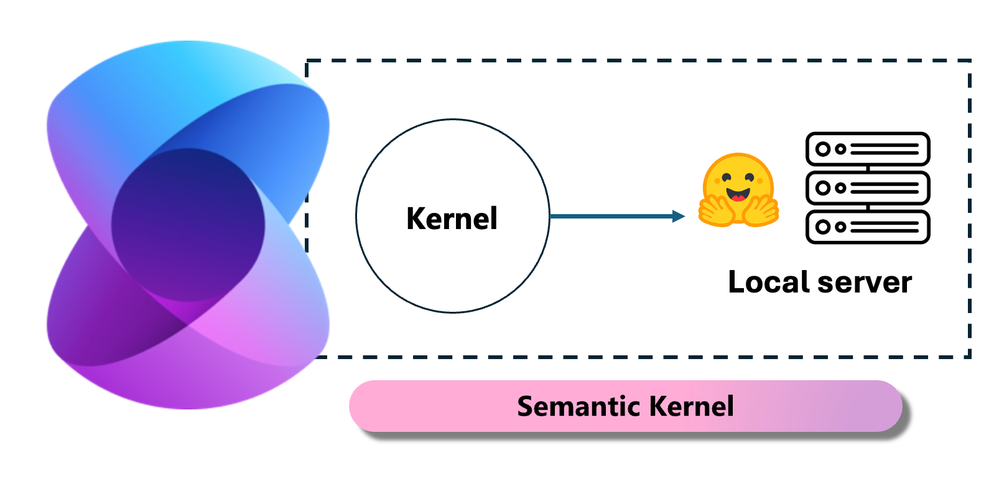

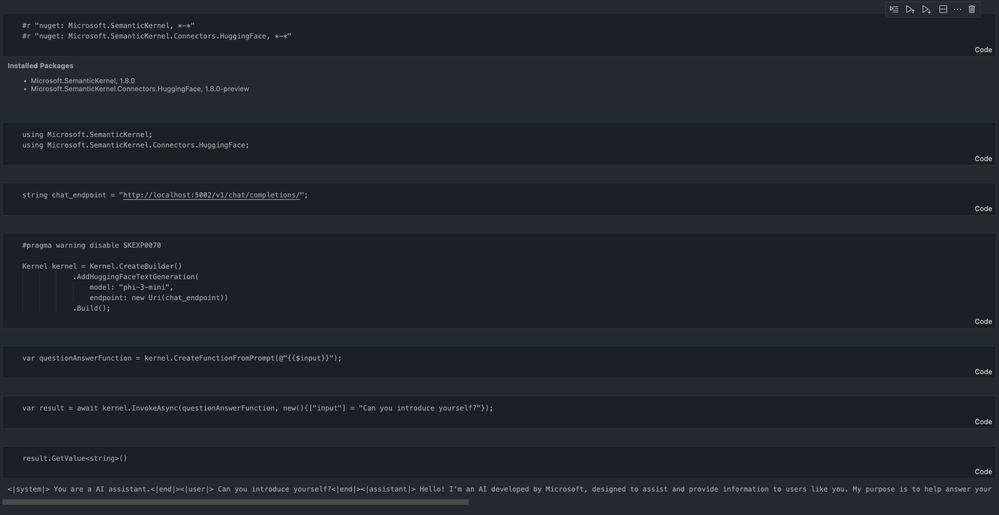

In Copilot applications, we build with frameworks such as Semantic Kernel or LangChain. These frameworks are generally compatible with Azure OpenAI Service and OpenAI models, and can also support open source models on Hugging Face as well as local models. What should we do if we want to use Semantic Kernel to access Phi-3-mini? Using .NET as an example, we can use the Hugging Face Connector in Semantic Kernel. By default, it maps to a model id on Hugging Face (the first time you use it, the model is downloaded from Hugging Face, which takes a long time). You can also connect it to a local service that you build yourself. Of the two, I recommend the latter because it gives you more autonomy, especially in enterprise applications.

As the figure shows, Semantic Kernel can easily connect to a self-built Phi-3-mini model server through a local service. Here is the running result.

Sample Code https://github.com/Azure-Samples/Phi-3MiniSamples/tree/main/semantickernel

Call quantized models with Ollama or LlamaEdge

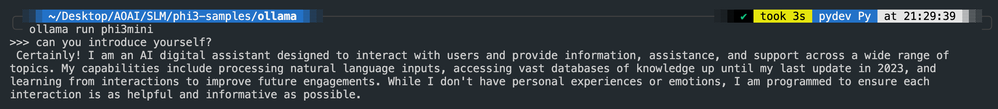

Many users prefer to run quantized models locally. With Ollama or LM Studio, individual users can call different quantized models at will. I will first show how to use Ollama to call the quantized Phi-3-mini model.

- Create a Modelfile

FROM {Add your gguf file path}

TEMPLATE """<|user|>
{{.Prompt}}<|end|>
<|assistant|>"""

PARAMETER stop <|end|>

PARAMETER num_ctx 4096

- Run in the terminal

ollama create phi3mini -f Modelfile

ollama run phi3mini

Here is the running result.

Sample Code https://github.com/Azure-Samples/Phi-3MiniSamples/tree/main/ollama
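Once `ollama run phi3mini` works in the terminal, the same model can also be called from code through the REST API that Ollama serves locally. The following is a minimal sketch, not part of the linked sample: it assumes Ollama is listening on its default address `http://localhost:11434` and that the model was created under the name `phi3mini` as above. The helper names `build_generate_request` and `ask_phi3` are hypothetical and exist only for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "phi3mini") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_phi3(prompt: str, model: str = "phi3mini", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With the server running, `print(ask_phi3("Can you introduce yourself?"))` should print the model's reply. Ollama applies the TEMPLATE from the Modelfile itself, so the prompt is sent as plain text without the `<|user|>` and `<|end|>` markers.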

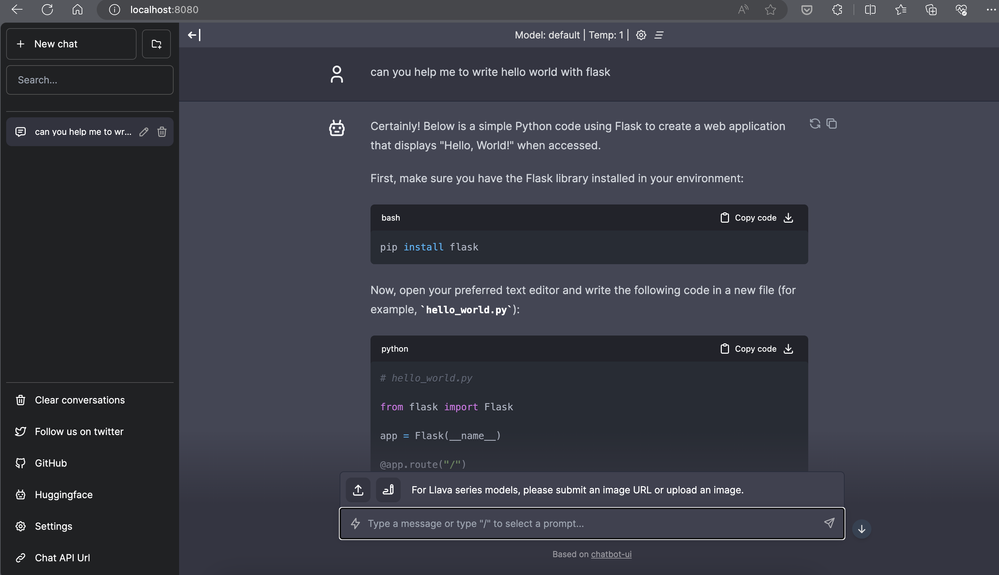

I have always been a supporter of cross-platform applications. If you want to use GGUF models in the cloud and on edge devices at the same time, LlamaEdge can be your choice. LlamaEdge can be understood as running on WasmEdge, a lightweight, high-performance, scalable WebAssembly runtime suitable for cloud native, edge, and decentralized applications. WasmEdge supports serverless applications, embedded functions, microservices, smart contracts, and IoT devices. Through LlamaEdge, you can deploy quantized GGUF models to both edge devices and the cloud.

Here are the steps to use it:

- Install and download related libraries and files.

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install.sh | bash -s -- --plugin wasi_nn-ggml

curl -LO https://github.com/LlamaEdge/LlamaEdge/releases/latest/download/llama-api-server.wasm

curl -LO https://github.com/LlamaEdge/chatbot-ui/releases/latest/download/chatbot-ui.tar.gz

tar xzf chatbot-ui.tar.gz

Note: llama-api-server.wasm and chatbot-ui need to be in the same directory

- Run scripts in terminal

wasmedge --dir .:. --nn-preload default:GGML:AUTO:{Your gguf path} llama-api-server.wasm -p phi-3-chat

Here is the running result.

Sample code https://github.com/Azure-Samples/Phi-3MiniSamples/tree/main/wasm
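Besides the chatbot UI, the running `llama-api-server.wasm` can be called from code. The sketch below is an illustration rather than part of the linked sample. It assumes the server exposes an OpenAI-style chat endpoint at `http://localhost:8080/v1/chat/completions`; please check the LlamaEdge documentation for the port and path of your version. The model name `phi-3-mini` and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: llama-api-server listens on port 8080 and serves an
# OpenAI-compatible chat completions endpoint.
LLAMAEDGE_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_payload(user_message: str, system_message: str = "", model: str = "phi-3-mini") -> dict:
    """Build an OpenAI-style chat payload; the model name is a hypothetical label."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages}


def chat_with_llamaedge(user_message: str, system_message: str = "", timeout: float = 120.0) -> str:
    """POST the chat payload to the local server and return the assistant's reply."""
    payload = build_chat_payload(user_message, system_message)
    request = urllib.request.Request(
        LLAMAEDGE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Because the payload follows the OpenAI chat format, the same function should work against the cloud and against an edge device by changing only `LLAMAEDGE_URL`.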

Run an ONNX quantized model

ONNX Runtime is an efficient runtime library for ONNX models. It supports multiple operating systems and hardware platforms, including CPU and GPU. Its key advantages are efficient performance and ease of deployment: developers can deploy trained models to production environments without worrying about the underlying inference framework. For the era of large models, ONNX Runtime has released a generative AI interface (Python/.NET/C/C++), which we can use to call the Phi-3-mini model. Next, we call Phi-3-mini through Python. When using ONNX Runtime Generative AI, you need to set up and compile the environment first; please refer to https://github.com/microsoft/onnxruntime-genai/blob/main/examples/python/phi-3-tutorial.md

Sample Code https://github.com/Azure-Samples/Phi-3MiniSamples/tree/main/onnx
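To give a feel for the Python interface, here is a minimal sketch of a token-by-token generation loop. It follows the shape of the early `onnxruntime_genai` examples published around the Phi-3 release; later releases changed parts of this interface, so treat the calls as an assumption and check the tutorial for your installed version. The `model_dir` argument and the helper names are hypothetical; `model_dir` stands for the folder containing the downloaded ONNX model files.

```python
def build_phi3_prompt(user_text: str) -> str:
    """Wrap the user's text in the Phi-3 chat markers used by the Modelfile above."""
    return f"<|user|>\n{user_text}<|end|>\n<|assistant|>"


def generate_with_onnx(model_dir: str, user_text: str, max_length: int = 2048) -> str:
    """Generate a reply token by token with ONNX Runtime Generative AI.

    Assumption: this uses the early onnxruntime_genai Python API;
    newer versions may require a different generation loop.
    """
    # Imported lazily so the prompt helper works without the package installed.
    import onnxruntime_genai as og

    model = og.Model(model_dir)
    tokenizer = og.Tokenizer(model)
    tokenizer_stream = tokenizer.create_stream()

    params = og.GeneratorParams(model)
    params.set_search_options(max_length=max_length)
    params.input_ids = tokenizer.encode(build_phi3_prompt(user_text))

    generator = og.Generator(model, params)
    pieces = []
    while not generator.is_done():
        generator.compute_logits()
        generator.generate_next_token()
        new_token = generator.get_next_tokens()[0]
        # Decode incrementally so the text can be streamed as it is produced.
        pieces.append(tokenizer_stream.decode(new_token))
    return "".join(pieces)
```

A call such as `generate_with_onnx("{Your ONNX model folder}", "Can you introduce yourself?")` returns the full reply as a string. Printing each decoded piece inside the loop instead would stream the answer to the terminal.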

Summary

The release of Phi-3-mini allows individuals and enterprises to deploy SLMs (small language models) on different hardware devices, especially mobile devices and industrial IoT devices, where they can complete simple intelligent tasks under limited computing power. Combined with LLMs, this can open up a new era of generative AI. Inference is only the first step. I hope you will continue to follow this series.

Resources

Phi-3 technical report https://aka.ms/phi3-tech-report

Learn about ONNX Runtime Generative AI https://github.com/microsoft/onnxruntime-genai

Learn about Semantic Kernel https://aka.ms/SemanticKernel

Read Semantic Kernel Cookbook https://aka.ms/SemanticKernelCookBook

Learn about LlamaEdge https://github.com/LlamaEdge/LlamaEdge

Learn about Ollama https://ollama.com/